This paper proposes a novel pipeline to estimate a non-parametric environment map with high dynamic range from a single human face image.

Lighting-independent and lighting-dependent intrinsic images of the face are first estimated separately in a cascaded network. The influence of face geometry on the two lighting-dependent intrinsics, diffuse shading and specular reflection, is then eliminated by distributing the intrinsics pixel-wise onto spherical representations, using the surface normals as indices. This yields two representations that simulate images of a diffuse sphere and a glossy sphere under the input scene lighting. Taking into account the distinct nature of light sources and ambient terms, we further introduce a two-stage lighting estimator that predicts lighting which is both accurate and realistic from these two representations. Our model is trained with supervision on a large-scale, high-quality synthetic face image dataset.

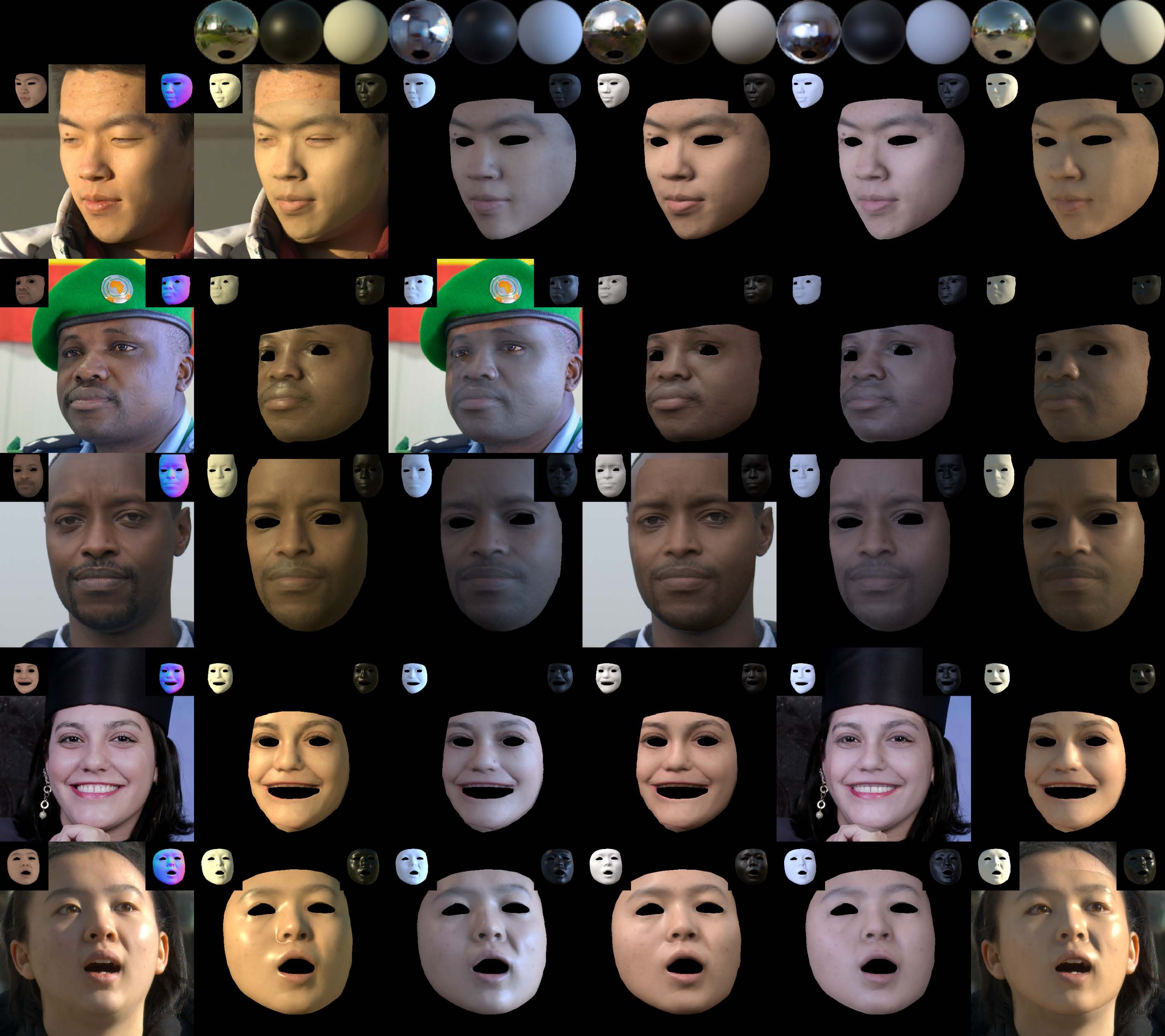

We demonstrate that our method allows accurate and detailed lighting estimation and intrinsic decomposition, outperforming state-of-the-art methods both qualitatively and quantitatively on real face images.

The pipeline of SPLiT. The tetrad intrinsic decomposition module predicts the tetrad of face intrinsics $\{ \mathbf{A}, \mathbf{N}, \mathbf{D}, \mathbf{S} \}$ from the input image $\mathbf{I}$ using two cascaded networks, $f_\mathrm{AN}$ and $f_\mathrm{DS}$. The lighting-dependent components $\{ \mathbf{D}, \mathbf{S} \}$ are distributed onto spherical representations $\{ \mathbf{D}^\circ, \mathbf{S}^\circ \}$ using $\mathbf{N}$ as per-pixel indices. The spherical lighting estimation module takes $\{ \mathbf{D}^\circ, \mathbf{S}^\circ \}$ and the constant normal map $\mathbf{N}^\circ$ as input. Taking into account the different nature of HDR light sources and ambient light, it first estimates the light sources and the ambient light with the light source network $f_\mathrm{src}$, then adds realistic textures to the ambient environment with the ambient texture network $f_\mathrm{amb}$ and the discriminator $f_\mathrm{disc}$ in a generative adversarial network (GAN) framework.
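The step that distributes $\{ \mathbf{D}, \mathbf{S} \}$ onto spherical representations using $\mathbf{N}$ as per-pixel indices can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function name `distribute_to_sphere`, the orthographic sphere parameterization, the camera-space convention with $+z$ toward the viewer, the output resolution, and the averaging of values that land in the same bin are all assumptions made for illustration.

```python
import numpy as np

def distribute_to_sphere(values, normals, mask, size=64):
    """Scatter per-pixel values onto a sphere image indexed by surface normals.

    Hypothetical helper, not taken from the paper's code.
    values:  (H, W, C) float array, e.g. diffuse shading D or specular reflection S.
    normals: (H, W, 3) unit normals in camera space, +z toward the camera (assumed).
    mask:    (H, W) boolean array marking valid face pixels.
    Returns (sphere, count): averaged values of shape (size, size, C) and the
    number of face pixels that landed in each sphere pixel, shape (size, size).
    """
    n = normals[mask]                      # (K, 3) normals of valid pixels
    v = values[mask]                       # (K, C) values of valid pixels
    front = n[:, 2] > 0                    # keep camera-facing normals only
    n, v = n[front], v[front]

    # Orthographic sphere image (assumed): nx in [-1, 1] maps to columns,
    # ny in [-1, 1] maps to rows, with image rows growing downward.
    cols = np.clip(((n[:, 0] + 1.0) * 0.5 * (size - 1)).round().astype(int), 0, size - 1)
    rows = np.clip(((1.0 - n[:, 1]) * 0.5 * (size - 1)).round().astype(int), 0, size - 1)

    acc = np.zeros((size, size, values.shape[-1]))
    count = np.zeros((size, size))
    np.add.at(acc, (rows, cols), v)        # unbuffered add handles repeated indices
    np.add.at(count, (rows, cols), 1.0)

    # Average where at least one pixel landed; untouched bins stay zero.
    sphere = acc / np.maximum(count, 1.0)[..., None]
    return sphere, count
```

In this sketch, sphere pixels that no face normal reaches stay at zero, and the returned `count` map can serve as a validity mask. How the actual method treats such unobserved directions is not stated here.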

SPLiT is trained and validated on a large-scale and high-quality synthetic face image dataset containing labels of intrinsic components and lighting. This dataset is built on the FaceScape 3D face dataset.

Comparisons are conducted on a test dataset containing real face images and the corresponding ground-truth environment lighting, including the Laval Face&Lighting database and our newly captured dataset.

Relighting virtual objects for realistic insertion using the estimated lighting.

Lighting transfer between portraits using the estimated lighting and face intrinsic components.

@article{split2023,
  author = {Fan Fei and Yean Cheng and Yongjie Zhu and Qian Zheng and Si Li and Gang Pan and Boxin Shi},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  title = {SPLiT: Single Portrait Lighting Estimation via a Tetrad of Face Intrinsics},
  year = {2024},
  volume = {46},
  number = {02},
  issn = {1939-3539},
  pages = {1079-1092},
  doi = {10.1109/TPAMI.2023.3328453},
  publisher = {IEEE Computer Society},
  address = {Los Alamitos, CA, USA},
  month = {feb}
}